GUIs and Pippi

One of the projects I’m working on now involves a lot of what I guess you’d call traditional sequencing: rhythms better expressed as pattern fragments than algorithms, pitches and other shapes that are more comfortable expressed on some kind of pianoroll-style grid than typed in a lilypond-style text format.

I used to love the Reason UIs for this. The piano roll and drum sequencing GUIs had their limitations (mostly I wanted a fluid grid and more flexibility for working with polyrhythms) but they were really useful UIs.

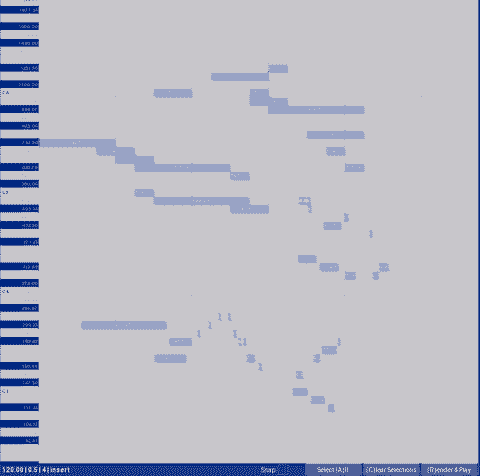

Last year I decided OK, a piano roll GUI would be a really useful component to have in the projects I’m working on with pippi. Working with MIDI as an intermediary format wasn’t very attractive, so I decided to start working on my own.

It’s not as polished as the Reason GUI (for example I have yet to implement dragging a phantom box to select a group of events – what’s that called?) but I can draw in a complex set of events, even snap them to a reconfigurable grid, and then render a block of audio out by running every event through a given pippi instrument, just as if I’d played the sequence with a MIDI controller into astrid directly. Well, better: astrid renders each event on demand, so a live performance carries the render latency with it, while a sequence rendered from the piano roll has sample-accurate timing. That’s something I never cared too much about when performing with astrid, but it is very nice to have for offline / non-realtime work where I often care very much about perfectly aligning events and segments with each other.
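
To make the sample-accurate part concrete, here is a minimal sketch of placing events at exact frame offsets in an output buffer. It is not the piano roll's actual code: `render_event` is a hypothetical stand-in for a pippi instrument (just a sine tone), the 44100 Hz rate is an assumption, and plain Python lists stand in for real sound buffers.

```python
import math

SAMPLERATE = 44100  # assumed sampling rate for this sketch


def to_frames(seconds, samplerate=SAMPLERATE):
    """Convert a time in seconds to the nearest whole frame index."""
    return int(round(seconds * samplerate))


def render_event(freq, length, samplerate=SAMPLERATE):
    """Hypothetical stand-in for an instrument render: a plain sine tone."""
    frames = to_frames(length, samplerate)
    return [math.sin(2 * math.pi * freq * i / samplerate) for i in range(frames)]


def render_sequence(events, samplerate=SAMPLERATE):
    """Mix (onset_seconds, length_seconds, freq) events into one mono buffer.

    Each event is rendered on its own and then summed into the output
    starting at the exact frame its onset maps to.
    """
    if not events:
        return []
    rendered = [
        (to_frames(onset, samplerate), render_event(freq, length, samplerate))
        for onset, length, freq in events
    ]
    total = max(start + len(buf) for start, buf in rendered)
    out = [0.0] * total
    for start, buf in rendered:
        for i, sample in enumerate(buf):
            out[start + i] += sample
    return out
```

Because every onset is converted to a frame index before mixing, two events with the same onset always start on the same sample, no matter how long each one took to render.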

Aside: pippi is a python library for composing computer music. Astrid is the interactive component which supports writing pippi scripts as instruments, and then performing with them via a command interface, MIDI I/O or through a custom zmq message protocol. Years ago it was just part of pippi itself, but when I threw out the python 2 version of pippi (based around byte strings as buffers) to write the current python 3 version with SoundBuffer classes that wrap memoryviews (among a lot of other improvements and additions), I also threw out the old fragile performance code. Astrid is still fundamentally a just-in-time rather than a hard realtime system – meaning all renders (unless they are scheduled) are done on demand, and bring the latency of the render overhead along with them. There’s a normal inner DSP loop – I’m basing things around JACK now, so the usual JACK callback is where buffers that have been queued to play get mixed together block-by-block, and that’s all the callback does. It actually ends up being a pretty stable approach, and once the render is complete playback is very deterministic – a tight stream through all the buffers in motion at the rate of the current JACK block size. In practice the latency has never been an issue for me, and my approach to performance has long been more of a conductor than a haptic instrumentalist, so I’m not bothered by the lack of tight sync to external I/O like sweeping a MIDI knob over a filter. It’s quite possible to play a normal synth piano with a MIDI controller without any noticeable latency on a pretty old thinkpad, and if you are manipulating a stream of small grains, you can filter-sweep in realtime to your heart’s content… but it’s not for everyone. It really shines when you want to develop systems which play themselves, helped along through a command interface or maybe a MIDI knob here or there, which is what I’m most interested in.
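
The block-by-block mixing in the callback can be sketched roughly like this. It is a toy model in plain Python, not astrid's code and not the JACK API (a real JACK process callback writes into port buffers); `BlockMixer` and its method names are made up for illustration.

```python
class BlockMixer:
    """Toy model of a callback that only mixes already-rendered buffers."""

    def __init__(self, blocksize):
        self.blocksize = blocksize
        self.playing = []  # entries are [buffer, read_position]

    def queue(self, buf):
        """Add a fully rendered buffer; it starts playing on the next callback."""
        self.playing.append([buf, 0])

    def callback(self):
        """Return one block: the sum of the next chunk of every queued buffer."""
        out = [0.0] * self.blocksize
        still_playing = []
        for entry in self.playing:
            buf, pos = entry
            chunk = buf[pos:pos + self.blocksize]
            for i, sample in enumerate(chunk):
                out[i] += sample
            entry[1] = pos + len(chunk)
            if entry[1] < len(buf):
                still_playing.append(entry)  # more left for later blocks
        self.playing = still_playing
        return out
```

All the rendering cost sits outside `callback`, which is why playback stays deterministic once a buffer has been queued.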

My workflow for non-realtime pieces is basically to do everything with a series of pippi scripts though. The structure of the program isn’t really standard from project to project but there are some patterns I’ve noticed that I’ve started to repeat between projects.

Working backward, there’s almost always some kind of `mixture.py` script which does the final assembly of all the intermediate sections so far, and probably some additional processing to each layer as a whole, and then finally the mixed output as a whole. (Just minor mastering-type stuff like compression or limiting, or larger scale mix automation on the tracks, etc.)

I tend to build the piece vertically in layers, a lot like you’d do in a traditional DAW – these channels for the drum tracks, these for the bass, etc. Except the channels are scripts which render intermediate WAV files into a stems directory and later get assembled by the mixture script into their final sequence & mixture. I’ll generally have some numbering or naming scheme for the variations that get rendered and through each layer mix and match my favorites – unless I’m working on something which is meant to be run from top to bottom for each render like Amber or any of the Unattended Computer pieces, etc.
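
As a rough illustration of that final assembly step, here is a sketch that sums a set of stems with per-stem gains. It makes a lot of assumptions: stems are already loaded as mono lists of floats at the same rate, the stem names are invented, and simple peak normalization stands in for the compression or limiting a real mixture script would do.

```python
def mix(stems, gains=None):
    """Sum named mono stems into one buffer, then scale down if it clips.

    stems: dict mapping a stem name to a list of float samples
    gains: optional dict mapping a stem name to a linear gain (default 1.0)
    """
    gains = gains or {}
    length = max((len(buf) for buf in stems.values()), default=0)
    out = [0.0] * length
    for name, buf in stems.items():
        gain = gains.get(name, 1.0)
        for i, sample in enumerate(buf):
            out[i] += sample * gain
    # Stand-in for mastering: only normalize when the peak exceeds full scale
    peak = max((abs(s) for s in out), default=0.0)
    if peak > 1.0:
        out = [s / peak for s in out]
    return out
```

Swapping a favorite variation in or out is then just a matter of which buffers go into the `stems` dict.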

Beyond that the specifics get tied very closely to the needs of whatever I’m working on.

My dream is to be able to coordinate much more granular blocks on a traditional DAW timeline, where I can choose from a pool of instrument scripts with the same interfaces and affordances as the current Astrid implementation, but optionally pin renders (probably with seed values) as blocks, just like a normal audio segment in a DAW, or compose sequences at the block level by diving into a piano roll GUI or a rhythm sequencing GUI just like Reason. The major difference, of course, is that all of the blocks would be outputs of fluid scripts which can be regenerated on demand, or on every run, etc.
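
Pinning a render with a seed could work something like the sketch below. This is only the general idea, using the standard library's `random.Random`; `render_block` is a hypothetical stand-in for an instrument script, and how seeds would actually be passed to pippi instruments is not decided here.

```python
import random


def render_block(seed, length=8):
    """Hypothetical instrument render whose random choices depend only on seed."""
    rng = random.Random(seed)  # private generator, no shared global state
    return [rng.uniform(-1.0, 1.0) for _ in range(length)]


# A pinned block stores its seed, so regenerating it gives the same audio
pinned_seed = 42
first = render_block(pinned_seed)
again = render_block(pinned_seed)
assert first == again

# An unpinned block picks a fresh seed on every run
fresh = render_block(random.randrange(2**32))
```

The important part is that each render owns its generator, so regenerating one block does not disturb the random stream of any other.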

I started trying to think through a lot of this over the weekend – how it might look, what affordances it might have. Some thoughts I’ve arrived at so far:

Simple projects

The project format should be simple and easily allow its elements to be manipulated externally. A simple directory structure with human-readable text files for sequences and metadata, and clearly labeled PCM audio files for all intermediate blocks and renders.

This could maybe look something like:

- `/orc` - containing individual instrument scripts whose filenames map to the names they can be called by in the GUI, just like the astrid command interface.
- `/scores` - obviously I’m stealing from csound with these names, but I don’t feel this division is restrictive. Score files here are the text versions of fragments which can be edited with the GUIs, but also referred to by name from any instrument script and used for further processing internally.
- `/blocks` - individual renders of segments/blocks could be organized into sub-directories by instrument name, and contain basic timing / position data in the filename itself.
- `/stems` - I’d also like to support a processing pipeline where each channel in the GUI can have a script callback to do processing on a full sequence of blocks. These would be cached by name here.
- `/stems/drums1.py` - could for example be the post-processing script for an individual channel or channel group. I think it makes sense for these to have a different interface and location than the core instruments but I’m not totally sure.
- `mix.py` - could be the final output script in the pipeline which would be fed all the stems for one last (optional) processing pass.
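
One way the timing data in block filenames could work is sketched below. The naming scheme here (zero-padded onset and length in frames, separated by a dash) is purely an assumption for illustration, as are the function names; nothing about the real format is settled.

```python
from pathlib import Path


def block_path(root, instrument, onset_frames, length_frames):
    """Build a path like <root>/blocks/<instrument>/<onset>-<length>.wav.

    Zero-padding keeps a plain alphabetical listing in timeline order.
    """
    name = f"{onset_frames:012d}-{length_frames:012d}.wav"
    return Path(root) / "blocks" / instrument / name


def parse_block_path(path):
    """Recover (instrument, onset_frames, length_frames) from a block path."""
    path = Path(path)
    onset, length = path.stem.split("-")
    return path.parent.name, int(onset), int(length)
```

For example, `block_path("myproject", "drums1", 44100, 22050)` would point at `myproject/blocks/drums1/000000044100-000000022050.wav`, and an external tool could read the position back without opening any metadata file.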

Modular interfaces

I want to approach the GUIs as individual pieces which can be composed together in the main DAW timeline GUI, or used ad hoc without having to create a full blown session. Just want to draw out a chorale passage that can be easily fed back into some arbitrary pippi script? Just fire up the piano roll and do it. Want a graphical interface for composing a short rhythm sequence that’s a little too complex for the built-in ASCII rhythms pippi uses to do cleanly? Fire up the rhythm sequencer GUI, etc.

By the same token I doubt I’ll have any integrated text editor GUI – I have no desire to reimplement vim, and probably other users will prefer to bring their own editors as well. So the GUIs should be able to easily find scripts, and watch them for changes – just like astrid does right now with its command interface.
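
Watching scripts for changes can be as simple as polling modification times. The sketch below shows that approach with only the standard library; it is an assumption about how a GUI might do it, not a description of how astrid's command interface does it, and `ScriptWatcher` is a made-up name.

```python
import os


class ScriptWatcher:
    """Poll a directory of .py scripts and report which ones changed."""

    def __init__(self, directory):
        self.directory = directory
        self.mtimes = self._scan()

    def _scan(self):
        """Map each script filename to its modification time in nanoseconds."""
        found = {}
        for name in os.listdir(self.directory):
            if name.endswith(".py"):
                path = os.path.join(self.directory, name)
                found[name] = os.stat(path).st_mtime_ns
        return found

    def changed(self):
        """Return sorted names of scripts that are new or modified since last call.

        Deleted scripts are dropped from the record but not reported.
        """
        current = self._scan()
        names = sorted(
            name for name, mtime in current.items()
            if self.mtimes.get(name) != mtime
        )
        self.mtimes = current
        return names
```

A GUI could call `changed()` on a timer and reload only the instruments it returns, which leaves the choice of editor entirely up to the user.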

Still, making a full project should probably look something like `astrid new project <project-name>` on the command line, and launching GUIs something like `astrid daw` for the main timeline, or `astrid pianoroll` for the pianoroll alone, etc. Skeletons for new instruments would be nice too – maybe `astrid new instrument <instrument name>` which could create a simple instrument template like:

    from pippi import dsp

    def play(ctx):
        out = dsp.buffer()
        yield out
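
The skeleton command could boil down to something like this sketch, which writes the template above into the project's `orc` directory. The `new_instrument` function, its arguments, and the refusal to overwrite an existing script are all assumptions for illustration; no such command exists yet.

```python
from pathlib import Path

# The instrument template from the post, stored as plain text
TEMPLATE = """from pippi import dsp

def play(ctx):
    out = dsp.buffer()
    yield out
"""


def new_instrument(project_root, name):
    """Write a fresh instrument script to <project_root>/orc/<name>.py."""
    path = Path(project_root) / "orc" / f"{name}.py"
    if path.exists():
        # Never clobber an instrument someone has already been editing
        raise FileExistsError(path)
    path.parent.mkdir(parents=True, exist_ok=True)
    path.write_text(TEMPLATE)
    return path
```

Since the filename is what the GUI would use to call the instrument, the `name` argument doubles as the instrument's name in the timeline.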

The pianoroll is a natural place to start, since I have the GUI begun already, and it’s the most desirable interface for the thing I’m working on now. I’m really excited to tackle the DAW part of this though, which I think will lead to some interesting possibilities on the macro level that I wouldn’t otherwise think of just working with my usual scripts, or a set of fixed-render blocks in Ardour.