double densed uncounthest hour of allbleakest age with a bad of wind and a barrel of rain

double densed uncounthest hour of allbleakest age with a bad of wind and a barrel of rain is an in-progress piece for resonators and brass. I’m keeping a composition log here as I work on it.

There are sure to be many detours. Getting it in shape might involve:

- more work on libpippi unit generators & integrating streams into astrid

- testing serial triggers with the solenoid tree & relay system built into the passive mixer

- finishing the passive mixer / relay system and firmware (what to do about the enclosure!?)

- general astrid debugging and quality-of-life improvements…

- composing maybe?

Tuesday March 26th

Here’s some random thinking about types of sound synthesis that interest me after work today… Synthesis is a huge field. Digital synthesis especially has the promise of exploring the full spectrum of possible sounds. It’s the appeal and maybe the fallacy of digital synthesis that all sounds, if you just find the right way to modulate the speaker, are possible. (Jliat’s All Possible CDs thought experiment is kind of the logical extreme of that line of thinking.)

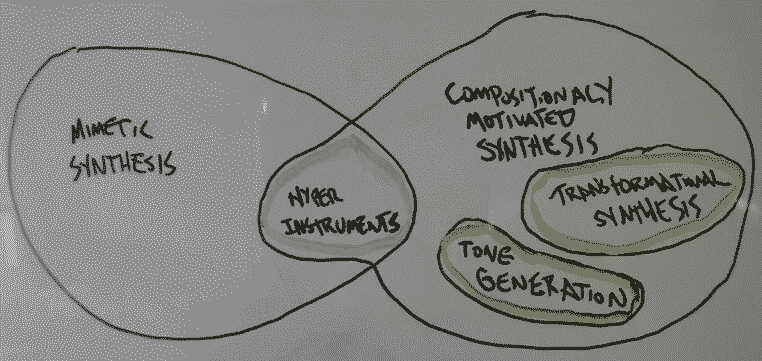

There are some areas of sound synthesis that I’m drawn to; the scribbly venn diagram shows them circled in green and grey. Mimetic synthesis probably has some other official name in the literature. I don’t remember how Luc Döbereiner refers to it, but it’s the other side of the coin in terms of compositionally motivated sound synthesis – a term of his I like a lot. Mimetic synthesis is just synthesis that tries to recreate sounds we know in the world. Particle synthesis with a physics engine to make glass breaking sounds, a complex FM patch to simulate the sound of a trumpet, interpolated multi-sampled recordings of a violin to simulate naturalistic violin playing, etc. Compositionally motivated sound synthesis is basically like it sounds: an attempt to build waveforms up from compositional processes and generational approaches to create unheard sounds that aren’t found in the world, or permutations of that sort of reaching for the unheard.

There’s lots of overlap in things like synthesizing impossible pianos with strings 7 miles long, or using something like modified waveguide synthesis to break and mutate a physical model of a natural instrument, like a guitar. I called those hyper-instruments in the diagram for lack of a better word, but I think that term might actually be more commonly used to refer to traditional instruments with digital or electronic augmentations, like a disklavier? Anyway here I’m thinking of compositionally motivated permutations of mimetic synthesis to put it in a really obtuse way.

That kind of hyper-instrument work is compelling to me compositionally, but the math is tough haha and I get a similar satisfaction from doing digital transformations to acoustic instruments that I’m handling in the world, so, I’m not honestly sure how far down that rabbit hole I care to go? Maybe if I get more comfortable with the math at some point, I’ll feel different. It’s a really cool area of work and I’m certainly interested to check things out as a listener.

Re-creating expressive versions of existing instruments doesn’t interest me all that much in instrument-building. It’s wonderful to be able to make sketches or sort of work with instruments that I can’t play or canoodle someone else into playing haha – there are some amazing piano and guitar software instruments out there that are really fun to play with – but I usually prefer to work with my own shitty instrumentalism and the talents of my instrumentalist friends I guess.

The stuff that really interests me is in the realm of, let’s call it, transformational synthesis – compositionally motivated sound synthesis which takes inputs from the world and does things, then sends those things back out into the world again. All kinds of concatenative synthesis, waveset segmentation synthesis, granular synthesis and microsound fall under that umbrella, as well as things like Paul Lansky’s synthesis of timbral families by warped linear prediction (which I’m studying again at the moment), etc.

Somewhere in there is also simple tone generation and combination which makes a lovely counterpart to the typically wilder timbres of transformational synthesis.

I think it’s helping me consider what sort of things I should be spending time on with the ugen / stream interfaces in astrid. Like basic tone generation and probably a small collection of microsound-ish oscs seems like the territory I’d like to cover for rain.

These are the Daves I know, I know. These are the Daves I know.

The astrid / instrument-building update is that I’ve been stuck on a memory alignment issue while refactoring the shared memory buffer system to use normal libpippi lpbuffer_t structs instead of the lpipc_buffer_t variants. The difference is kinda dumb: lpbuffer_t has a pointer to the variable-sized data portion with the samples, and the lpipc_buffer_t structs have a flexible array member at the end instead. Using the flexible array member (FAM) means only having to create and manage one shared memory segment instead of two, which is why my lazy ass decided to go that way at first and just make a slightly modified duplicate data structure for shared memory buffer, but my implementation with the two memory segments is breaking with memory alignment errors when trying to initialize the second segment… I’m doing something goofy and I’m not sure what.

At the moment I’m just trying to understand the problem with the two-segment implementation, but I’m starting to get interested in exploring the trade-offs of just using the FAMs on normal lpbuffer_t structs, too. Maybe that’s a nicer way to go anyway, I haven’t thought the trade-offs through yet.
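To make the difference concrete for myself, here is a minimal sketch of the two layouts. The struct and function names (ptr_buffer_t, fam_buffer_t, fam_buffer_size and friends) are hypothetical stand-ins and not the real libpippi definitions, and it uses plain heap allocation instead of shared memory to keep it short.

```c
#include <assert.h>
#include <stddef.h>
#include <stdlib.h>

/* Hypothetical stand-ins, NOT the real lpbuffer_t / lpipc_buffer_t. */

/* Pointer style: the header and the samples are two separate allocations
 * (or, in shared memory, two separate segments). */
typedef struct ptr_buffer_t {
    size_t length;
    int channels;
    float * data;
} ptr_buffer_t;

/* FAM style: the samples live directly after the header, so a single
 * allocation (or a single shared memory segment) holds everything. */
typedef struct fam_buffer_t {
    size_t length;
    int channels;
    float data[];
} fam_buffer_t;

/* Bytes needed for a FAM buffer: header plus the sample payload. */
size_t fam_buffer_size(size_t length, int channels) {
    assert(channels > 0);
    return sizeof(fam_buffer_t) + sizeof(float) * length * (size_t)channels;
}

/* One allocation for header and samples together. */
fam_buffer_t * fam_buffer_create(size_t length, int channels) {
    fam_buffer_t * buf = calloc(1, fam_buffer_size(length, channels));
    if(buf == NULL) return NULL;
    buf->length = length;
    buf->channels = channels;
    return buf;
}

/* Two allocations: one for the header, one for the samples. */
ptr_buffer_t * ptr_buffer_create(size_t length, int channels) {
    ptr_buffer_t * buf;
    assert(channels > 0);
    buf = calloc(1, sizeof(ptr_buffer_t));
    if(buf == NULL) return NULL;
    buf->data = calloc(length * (size_t)channels, sizeof(float));
    if(buf->data == NULL) {
        free(buf);
        return NULL;
    }
    buf->length = length;
    buf->channels = channels;
    return buf;
}
```

One trade-off that seems worth keeping in mind: a pointer stored inside a shared memory segment is generally only meaningful in the process that wrote it, so the pointer style needs the data pointer patched up after attaching, while the FAM style carries its samples along with the header.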

Friday March 22nd

I took the evening tonight to try to do some cleanup on the new pippi.renderer module. The cython Instrument interface is a little more like a wrapper for the lpinstrument_t struct, which makes passing things around somewhat easier to think about. Just like most of the C API takes a pointer to the lpinstrument_t struct, the cython API (more often) takes a reference to the Instrument to do things, and the cython interface can call into the C API as it likes with the internal reference to the lpinstrument_t struct. There was also a weird leftover from earlier days that was loading the python renderer – in other words the actual python instrument script which gets live reloaded – externally and calling it an “instrument” confusingly…

I got a bit of a start on thinking through a revision to the way the ADC works… and ended up realizing the way it works already is probably fine, what I really want to be able to do is to create named buffers in shared memory from python ad hoc, like a sampler.

The current ADC interface creates a fixed-size ringbuffer and has interfaces for writing samples into it (locking the buffer during the writes) and reading samples from it. There’s a global name for the shared memory / semaphore pair because it was originally designed to run in a single ADC program.

It seems like the main thing to do is just to take a prefix for the name as a param and leave the rest as it is more or less. It’s a project for another night, but I’m really excited to get the ADC working again.
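For reference, the core of a fixed-size ringbuffer with write and read interfaces is pretty small. This is a minimal in-process sketch with hypothetical names (ring_t, ring_write, ring_read_latest); it leaves out the shared memory segment and the semaphore locking that the real ADC interface uses.

```c
#include <assert.h>
#include <stddef.h>

#define RING_LENGTH 8 /* tiny on purpose; a hypothetical size */

typedef struct ring_t {
    float data[RING_LENGTH];
    size_t pos; /* total number of samples written so far */
} ring_t;

/* Write n samples, wrapping around and overwriting the oldest ones. */
void ring_write(ring_t * ring, const float * src, size_t n) {
    size_t i;
    assert(ring != NULL && src != NULL);
    for(i=0; i < n; i++) {
        ring->data[(ring->pos + i) % RING_LENGTH] = src[i];
    }
    ring->pos += n;
}

/* Copy the most recent n samples into dest, oldest first.
 * n is clamped to what the ring actually holds.
 * Returns the number of samples copied. */
size_t ring_read_latest(const ring_t * ring, float * dest, size_t n) {
    size_t i, start;
    assert(ring != NULL && dest != NULL);
    if(n > RING_LENGTH) n = RING_LENGTH;
    if(n > ring->pos) n = ring->pos;
    start = ring->pos - n;
    for(i=0; i < n; i++) {
        dest[i] = ring->data[(start + i) % RING_LENGTH];
    }
    return n;
}
```

Taking a name prefix as a param would then only change how the shared memory / semaphore pair is named, not any of the read and write logic.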

The ugen interface is also still in limbo pretty much. The way I’ve implemented it so far is a bit backward, and I think I need to go back and add the ugen features to the test C pulsar instrument first, and then expose some APIs to cython that are similar to the current cython ugen module interfaces.

The way I’m doing parameter updates to the graph is really clunky and weird. I talked through some ideas recently with Paul B, who has been inspiring the C API of this project from the beginning (lots of his code has also been embedded in pippi for a long while!), and he pointed me toward some nice ideas…

Tonight it also occurred to me that something I’d really like to do with the stream interface is basically simple mixing: routing inputs to outputs without much processing.

For example the inputs of the soundcard to the inputs of an instrument’s internal ringbuffer, or the inputs of the soundcard to the outputs of a soundcard with some basic attenuation or something. The last time astrid was functional enough for performance I was using either a supercollider script or a PD patch to do that routing. It would be really nice to do it from within the scripts themselves.

Maybe it looks like a new mixer callback:

    def mixer(ctx):
        # mono passthrough
        ctx.inputs(0).connect_to(ctx.outputs(0))

        # use a param from the session context to control routing
        if ctx.s.foo == 'bar':
            ctx.inputs(1).connect_to(ctx.outputs(0))
        else:
            ctx.inputs(1).connect_to(ctx.outputs(1))

Or something like that?
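Underneath a callback like that, the routing could boil down to a small gain matrix applied in the audio callback. A minimal C sketch, assuming hypothetical mixer_t, mixer_connect and mixer_process names (none of these exist in astrid) and a fixed two-channel setup:

```c
#include <assert.h>
#include <stddef.h>

#define MAXCH 2 /* hypothetical fixed channel count */

/* gains[in][out]: a gain of 0 means "not connected" */
typedef struct mixer_t {
    float gains[MAXCH][MAXCH];
} mixer_t;

/* Route input channel `in` to output channel `out` with some attenuation. */
void mixer_connect(mixer_t * m, int in, int out, float gain) {
    assert(m != NULL);
    assert(in >= 0 && in < MAXCH && out >= 0 && out < MAXCH);
    m->gains[in][out] = gain;
}

/* Sum every connected input into each output, one block at a time.
 * Outputs are accumulated into, like the jack callback in the pulsar demo. */
void mixer_process(const mixer_t * m, size_t blocksize, float ** input, float ** output) {
    size_t i;
    int in, out;
    assert(m != NULL && input != NULL && output != NULL);
    for(i=0; i < blocksize; i++) {
        for(out=0; out < MAXCH; out++) {
            for(in=0; in < MAXCH; in++) {
                output[out][i] += input[in][i] * m->gains[in][out];
            }
        }
    }
}
```

The python connect_to calls would then just be setting entries in that matrix, and a session param could flip them at any time.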

One of the things I was discussing with Paul was how I disliked writing connections like the above as function calls. It feels clunky and difficult to read when patches start to grow larger than trivial things. Like this test patch I wrote when developing the basic graph system is really hard for me to parse and understand:

    from pippi import fx, ugens

    graph = ugens.Graph()
    graph.add_node('s0b', 'sine', freq=0.1)
    graph.add_node('s0a', 'sine', freq=0.1)
    graph.add_node('s0', 'sine', freq=100)
    graph.add_node('s1', 'sine')
    graph.add_node('s2', 'sine')

    graph.connect('s0b.output', 's0a.freq', 0.1, 200)
    graph.connect('s0a.output', 's0.freq', 100, 300)
    graph.connect('s0.output', 's1.freq', 0.1, 1)
    graph.connect('s0.output', 'main.output', mult=0.1)
    graph.connect('s1.output', 's2.freq', 60, 100)
    graph.connect('s2.output', 'main.output', mult=0.2)
    graph.connect('s2.freq', 's0b.freq', 0.15, 0.5, 60, 100)

    out = graph.render(10)
    out = fx.norm(out, 1).taper(0.1)
    out.write('ugens_sine.wav')

Okay “really hard” is relative, but it doesn’t feel like I can scan it and make sense of it at a glance the way that a graphical patch language shows the flow very clearly.

He’s getting me interested in exploring a forth-y DSL for this maybe, but I’m not really sure what that would look like at the moment. I’m inspired by how beautiful and small his sporth programs are. I have a really hard time with the stack-based approach, but it feels like once you learn to read it fluently, it could be a really beautiful thing to describe signal flows… The way it would actually look in an astrid script is unclear. Something nutty like this maybe:

    from pippi import renderer

    STREAM = """
    # store melody in a table
    _seq "0 5 0 3 0 3 5 0 3" gen_vals

    # create beat and half-beat triggers
    _half var _beat var
    4 metro _half set
    _half get 2 0 tdiv _beat set

    # play melody with a sine wave
    (_half get 0 _seq tseq 75 +) mtof 0.1 sine

    # apply envelopes on beats and half-beats
    _half get 0.001 0.1 0.2 tenvx *
    _beat get 0.001 0.1 0.2 tenvx *

    # add delay
    dup (0.5 (4 inv) delay) (0.4 *) +
    """

    # other callbacks and stuff
    def play(ctx):
        yield None

    if __name__ == '__main__':
        renderer.run_forever('sporthy')

The idea being that instead of a callback you have a DSL string that gets evaluated on save. (That sporth patch is stolen from the interactive version of Paul’s sporth cookbook.)

It seems like an interesting thing to try in a future nim interface, too, since nim has very strong compile time metaprogramming abilities for creating DSLs… maybe a sporth-inspired frontend DSL?

I’ll probably deal with the clunky interfaces for the medium-to-long-term future, but that’s a good project to think about for later improvements.

Tuesday March 19th

Nice to feel like I’m making steady progress on this again. Triggers are triggering in the new python instruments once again!

I’m pretty excited to pick up on serial triggers again, and try out some synchronized motor/relay stuff now that this is all working again…

Monday March 18th

The python wrapper on top of the new C astrid instruments is still acting weirdly. I’m not handling keyboard interrupts properly and messages are getting passed to python in surprising ways sometimes…

Back and forth for a while but I settled on a basic pattern of:

- Handling all messages internally in a C thread, and relaying the appropriate ones to a new external message queue for python

- Python only handles the messages it really needs to handle:

  - LPMSG_PLAY which signals a python render (or also a C render if an async callback is defined on the instrument)

  - LPMSG_TRIGGER which signals a python trigger callback: this is the main sequencer abstraction in astrid at the moment. This callback schedules future events.

  - LPMSG_LOAD this message forces a load/reload of an instrument… but they always get reloaded if the source file changes so this maybe doesn’t really need to exist anymore, it’s a leftover from when astrid had a global reload toggle which if enabled would reload instruments on every new render, or wouldn’t…

Something goofy is still happening in the python layer, and I got a plain C instrument to crash on shutdown once! I’m feeling more encouraged about this though, it’s already fun to be able to play with the python instruments a little bit again.

Makes reaching for a python interface to the new unit generator graph feel possible, maybe, too.

Sunday March 17th

Fumbling once again to take a python unicode string and pass it to a C function that wants char * this morning, I figured I’d write it down for next time.

The basic dance is to encode the string as bytes, store a reference to this as a python object to prevent it from being garbage collected, and then assign it to a cdefed char * pointer. The cython compiler takes the pointer from the bytes object and passes it to the C function, keeping the reference around as long as the intermediary python object is alive. (So if the string needs to stay around, malloc a copy first!)

    def something(str a_unicode_string_from_python):
        # keep a reference to the bytes object around to prevent garbage collection until the function returns
        bytes_object_reference = a_unicode_string_from_python.encode('UTF-8')

        # The cython compiler knows how to find the pointer to the internal string of the bytes object
        cdef char * a_char_pointer = bytes_object_reference

        # And now we have our char pointer
        a_c_function_that_wants_a_char_pointer(a_char_pointer)

        # After this point, the memory from the bytes object is freed so make a copy if the string
        # needs to be long-lived beyond this point!
        return

Edit Mar 20th: If anyone besides me is reading this, beware that this just passes unicode bytes happily along. It works fine for ASCII inputs, but if you use code points above the ASCII range that need more than one byte, it’ll get passed along as garbage unless the bytes are being explicitly decoded as unicode on the C side.
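To see what the C side actually receives, it helps to remember that a char * only knows about bytes. A minimal sketch with hypothetical helper names (utf8_codepoints, is_ascii), assuming the incoming bytes are valid UTF-8:

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Count UTF-8 code points by skipping continuation bytes (10xxxxxx).
 * Assumes the input is valid, NUL-terminated UTF-8. */
size_t utf8_codepoints(const char * s) {
    size_t count = 0;
    assert(s != NULL);
    for(; *s != '\0'; s++) {
        if(((unsigned char)*s & 0xC0) != 0x80) count++;
    }
    return count;
}

/* Returns 1 if every byte is in the ASCII range, 0 otherwise. */
int is_ascii(const char * s) {
    assert(s != NULL);
    for(; *s != '\0'; s++) {
        if((unsigned char)*s > 0x7F) return 0;
    }
    return 1;
}
```

For the UTF-8 bytes of “café”, strlen reports 5 while utf8_codepoints reports 4, which is exactly the mismatch that bites any C code assuming one byte per character.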

If ASCII is really all that’s needed, doing a_unicode_string_from_python.encode('ascii', errors='replace') will encode the unicode string as ASCII bytes and replace any non-ASCII characters with ? (or use ignore to strip them out; see note 1). The cython compiler then gets the pointer to the underlying bytes and assigns that to a_char_pointer. (A python 3 str has no decode method, so the encode call is the one that takes the errors handler.)

1. Or xmlcharrefreplace if it makes sense: we used this to solve a problem at work once where the mysql database tables could only handle up to three byte codepoints. (If you’re a web person who used LAMP stacks during the emoji revolution you might remember this problem!) Using errors='xmlcharrefreplace' translated them into harmless ASCII versions that could be rendered the same way by a browser.

Saturday March 16th

The shape of astrid’s python renderer changed a fair bit today. Until this morning the python renderer and a full python interpreter was embedded inside the main astrid program. Working on wrapping the new astrid_instrument_start and friends today I realized since I’m opening a queue to send messages to the python interpreter anyway, the renderer doesn’t really need to be running inside an embedded environment. I moved the cyrenderer module into pippi.renderer (which might just become astrid eventually since that’s what it wraps) and changed the way instrument scripts work slightly so that they can be run standalone as normal python scripts.

For example here’s the simple.py script from yesterday modified in the new style:

    from pippi import dsp, oscs, renderer

    def play(ctx):
        ctx.log('Rendering simple tone')
        yield oscs.SineOsc(freq=330, amp=0.5).play(1).env('pluckout')

    # This is the new thing:
    if __name__ == '__main__':
        renderer.run_forever('simple', __file__)

The renderer was already loading the script as a module, collecting the play methods and executing them on demand, so adding a single line to the python __main__ routine doesn’t interfere with live-reloading at all, and lets the script initialize itself in the background, load itself as a module and wait for messages instead of relying on a special console.py runner like I’ve been doing for ages.

In the process I decided that the old astrid dac, adc, seq, and renderer programs have run their course. They all live on embedded inside the astrid_instrument_start routine which starts the jack thread, the message listener thread and the sequencer thread – all of which are started with renderer.run_forever from the python script.

I haven’t yet figured out how to fit the new linenoise console into python instruments but I’d like them to feel the same as the C instruments and drop into an astrid console when started…

Friday March 15th

Getting closer to integrating the embedded python interpreter in the new style astrid C instruments. I’m working on removing redis which was used to send serialized buffers from the renderer processes to a single dac program for playback.

Instead of redis, I’m saving the serialized buffer as a shared memory segment, and sending a new RENDER_COMPLETE message on the instrument’s message queue with the address of the shared memory segment. The receiver is the instrument message handler which deserializes the buffer and places it into the scheduler for playback in the jack callback. Those buffer IDs could be stored or passed around elsewhere too though, like the LMDB session. I’m hoping this’ll make a decent backend to build a shared sampler interface and built-in input ringbuffers, etc.

Before that I had some embedded python quirks to work through. The new PyConfig structure makes it pretty easy to configure an embedded python interpreter that behaves like it’s the standard python interpreter in whatever environment you happen to be in.

The last bit is important for astrid, since pippi isn’t a trivial package to install (though once libpippi is more fully integrated that situation should improve) and re-building pippi and its dependencies (hello numpy!) along with the embedded interpreter is a much bigger pain in the butt. It’s nicer to keep them separate for now, and PyConfig helps a whole lot with that.

Essentially the only crucial bit is to install pippi in a venv (or system-wide if you know what you’re doing) and then run the astrid C programs with the venv activated. PyConfig uses the venv environment and the embedded interpreter acts just like it’s the normal python installed in your system.

From the new linenoise repl: (^_- is the winky astrid command prompt – also notice the play params get ignored since they’re still only parsed in the cython layer at the moment)

    ^_- p foo=bar
    msg: p
    ^_-

The pulsar application gets the play message from the REPL and calls the internal cython interface to begin a render.

    Mar 15 08:24:39 lake pulsar[514199]: MSG: pulsar
    Mar 15 08:24:39 lake pulsar[514199]: MSG: 6 (msg.voice_id)
    Mar 15 08:24:39 lake pulsar[514199]: MSG: 1 (msg.type)
    Mar 15 08:24:39 lake pulsar[514199]: MSG: play

The embedded interpreter executes this python instrument script:

    from pippi import dsp, oscs

    def play(ctx):
        ctx.log('Rendering simple tone')
        yield oscs.SineOsc(freq=330, amp=0.5).play(1).env('pluckout')

It logs a message from inside the python render process (the first message is from the intermediary cython module layer which collects and executes all the play methods in an instrument script, does live reloading, etc.).

    Mar 15 08:24:39 lake astrid-pulsar[514199]: rendering event <cyrenderer.Instrument object at 0x797f174dcd00> w/params b''
    Mar 15 08:24:39 lake astrid-pulsar[514199]: ctx.log[simple] Rendering simple tone

In the cython layer, the buffer is serialized and passed to an astrid library routine which generates a buffer ID, saves the buffer string into shared memory and sends the RENDER_COMPLETE message on the pulsar message queue.

The pulsar message handler thread gets the message and logs that it arrived.

    Mar 15 08:24:39 lake pulsar[514199]: MSG: pulsar
    Mar 15 08:24:39 lake pulsar[514199]: MSG: 0 (msg.voice_id)
    Mar 15 08:24:39 lake pulsar[514199]: MSG: 7 (msg.type)
    Mar 15 08:24:39 lake pulsar[514199]: MSG: render complete
    Mar 15 08:24:39 lake pulsar[514199]: pulsar-7-0-0-0-0-0-0-0

Next up: deserialize the buffer string and place it into the scheduler. Should be way easier than the other parts of this process but my morning astrid time is over for today. :-)

Update: I made those changes after work today and… seems to be going ok?

Monday March 11th

I added a little serializer for size_t values and a prefix that can be used as LMDB keys, or shared memory keys when storing buffers.
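The key that shows up in the March 15th log above (pulsar-7-0-0-0-0-0-0-0) looks like the prefix followed by the bytes of the counter written out in decimal. The sketch below reproduces that shape as a guess; make_buffer_key is a hypothetical name, and this is not astrid’s actual serializer.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <stdio.h>
#include <string.h>

/* Hypothetical helper: writes "<prefix>-b0-b1-...-b7" into out, where
 * b0..b7 are the bytes of value, least significant byte first.
 * Returns 0 on success, or -1 if out is too small to hold the key. */
int make_buffer_key(const char * prefix, size_t value, char * out, size_t outsize) {
    uint64_t v = (uint64_t)value;
    size_t used;
    int written, i;

    assert(prefix != NULL && out != NULL);

    written = snprintf(out, outsize, "%s", prefix);
    if(written < 0 || (size_t)written >= outsize) return -1;
    used = (size_t)written;

    for(i=0; i < 8; i++) {
        /* shifting instead of casting to bytes keeps the byte order
         * the same regardless of the host's endianness */
        written = snprintf(out + used, outsize - used, "-%u",
                (unsigned)((v >> (8 * i)) & 0xFF));
        if(written < 0 || (size_t)written >= outsize - used) return -1;
        used += (size_t)written;
    }

    return 0;
}
```

Because the key is just a printable string, the same value could plausibly serve as an LMDB key or as the name of a shared memory segment.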

When the python renderer has a buffer rendered it will:

- Serialize the buffer into a byte string the usual way

- Save the serialized buffer string into shared memory (or maybe with LMDB, I’m not sure of the trade-offs though) using the key generated from the same kind of counter used for voice ids

- Send the counter value / key over the buffer message queue

The thread that’s waiting for buffer messages will then:

- Use the key to look up the serialized buffer string in shared memory and get a pointer to it

- Deserialize the buffer and place it into the scheduler

- Clean up the shared memory holding the serialized copy of the buffer

As long as I only send the key over the buffer message queue once the shared memory has been released, it seems pretty safe to have the receiving thread clean up after the renderer.

Once I finish porting pippi’s SoundBuffers to be libpippi-backed rather than numpy-backed it should be possible to skip the serialization step and just put the pointer to the buffer into shared memory and hand it off without extra copies. Might make the python renderer slightly more responsive but I also never measured the latency added by serialization so who knows!

Sunday March 10th

Happy new time offset!

The pippi docs are back online.

Saturday March 9th

I’ve been treating this pulsar program as just a test for instrument building, but I decided to try rolling with it a bit today. Something in the trumpet going D - E - A - C against a sustained Bb into a slow E - A on the tenor sax might sound nice.

Boy howdy I need to work on my intonation. I think I feel OK with writing something simple and fairly fixed that I can practice to muddle through on the trumpet, and probably save the more improv-y sections for trombone which I’m a bit more comfortable on…

There’s more plumbing work with the new astrid instruments to do for this still. On the list:

- Use pselect to wait on shutdown signals, playq messages (that needs a better name – it’s the posix queue with a well-known name that the instrument mainly listens for messages on) and optionally serial messages.

- Move the serial listener program into an optional feature of the astrid instrument. Maybe setting a path on the instrument struct to point to the tty in the filesystem is enough for now, eventually supporting multiple serial connections at once will be nice.

- Add support for the serialized buffer thread as an option for astrid instruments with an async renderer active to listen for serialized buffers arriving on Ye Olde Redis Pubsub. (Later to be replaced with LMDB, but that’s a project for another time.)

- Embed the python renderer. Point to a python script to load optionally as a start?

- Feed the old adc ringbuf

- Probably add a new stream ringbuf, so python renderers can take bits from the stream output to process.

- Possibly add some simple routing control for deciding how to mix the stream/async layers? Or just do it ad hoc in the stream callback?

- What does a python stream callback look like? (A lot of ugens orchestrating a graph prolly… also likely a project for another time…)

Thursday March 7th

Been busy at work and haven’t had a ton of energy for this project, but got a start this morning on getting things ready to incorporate antirez’s linenoise as a built-in command repl.

I made some revisions to the command types astrid supports:

- q (quit) was previously k (kill) but does the same thing: clean shutdown.

- u (update) is still for param updates. This needs a new parameter parser which reads key=value style strings and turns them into instrument or ugen param constants based on a lookup table. At the moment the message arrives empty, but triggers the update param callback in the instrument script.

- p (play) is still the command to execute an async render and schedule it for playback immediately. I was considering changing it to r (render) but play seems a little more intuitive?

- s (schedule) was previously (stop) for voice stopping, but that doesn’t make sense any more. Sequences are scheduled through the trigger callbacks now, not the older built-in looping abstractions. This is an alternate async render command which takes an onset time in the future and schedules the render so that the playback will begin as close to the requested time as possible. This also needs a new param parser in C as these things were previously parsed out in cython.

- t (trigger) executes the instrument’s trigger callback. In the cython version of this, the callback returned a list of events that were scheduled immediately. I’m still thinking about the C version. Could just be calls to the message scheduler with the same now from inside the callback.

- m (message) is unimplemented, but inside the forthcoming instrument linenoise repl we could use it like m foo p to trigger a play message on the foo instrument. Needs some thinking though. The important bit here is just to provide a message type that the trigger callback can use to communicate on other instrument message queues.
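The key=value parser for update messages could start out as something like this. It is only a sketch: the table contents, the parse_param name and the float-only values are all assumptions, not what astrid does.

```c
#include <assert.h>
#include <stdlib.h>
#include <string.h>

/* Hypothetical subset of an instrument's param constants */
enum { PARAM_FREQ, PARAM_AMP, PARAM_PW, NUMPARAMS };

typedef struct param_entry_t {
    const char * name;
    int id;
} param_entry_t;

/* Lookup table from the names typed at the console to param constants */
static const param_entry_t param_table[] = {
    {"freq", PARAM_FREQ},
    {"amp", PARAM_AMP},
    {"pw", PARAM_PW}
};

/* Parse a "key=value" string. On success, stores the value and returns
 * the matching param id. Returns -1 for unknown keys or malformed input. */
int parse_param(const char * arg, float * value) {
    const char * eq;
    char * end;
    size_t keylen, i;

    assert(arg != NULL && value != NULL);

    eq = strchr(arg, '=');
    if(eq == NULL || eq == arg) return -1; /* no '=' or empty key */
    keylen = (size_t)(eq - arg);

    for(i=0; i < sizeof(param_table) / sizeof(param_table[0]); i++) {
        if(strlen(param_table[i].name) == keylen &&
                strncmp(arg, param_table[i].name, keylen) == 0) {
            *value = strtof(eq + 1, &end);
            if(end == eq + 1) return -1; /* nothing numeric after '=' */
            return param_table[i].id;
        }
    }

    return -1;
}
```

With this, a console message like u amp=0.5 would map to PARAM_AMP with a value of 0.5, and anything that isn’t in the table is rejected instead of silently ignored.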

For a moment I thought I had a deadlock on the message listening thread and the scheduler priority queue thread, but I was just accidentally calling the shutdown routine twice, which tried to join on the same threads twice and created a deadlock.

Sunday March 3rd

Oh, look it is March!

The large allocation mystery ended up being sort of funny. Address Sanitizer and valgrind were pointing and shouting at the wavetable creation routine in the new pulsar osc saying that the attempt to create a wavetable with 4096 floats was allocating petabytes of memory. I still reach for print debugging first, which is ultimately what caused the confusion…

The argument to the allocator was a size_t value, and I’m in the lazy habit of printing those values as ints when I want to inspect them. (I guess typing the extra (int) cast seems easier than remembering to write %ld instead of %d? I don’t know why I started doing that.) This time, casting the mangled value to an int printed it as 4096, as expected. Printing the value without casting sure enough showed the giant number of bytes being requested.

The value was coming in through a function call that uses va_args, and the size values were being read as size_t… but I was calling the function with ints, doh. This mangled the value somehow (the man pages say weird things can happen, sure enough!) and attempted to allocate a ridiculous amount of memory.
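A note to future me on the safe version of that pattern: the type passed at the call site has to match the type given to va_arg, and the compiler can’t check it for you. A minimal sketch with hypothetical names (total_table_size, example_total), not the actual pulsar osc constructor:

```c
#include <assert.h>
#include <stdarg.h>
#include <stddef.h>

#define WTSIZE 4096

/* Sums `count` variadic arguments, each of which is read as a size_t.
 * Callers must pass size_t values: passing a plain int here is
 * undefined behavior, which is how the "petabytes" request happened. */
size_t total_table_size(int count, ...) {
    va_list args;
    size_t total = 0;
    int i;

    assert(count >= 0);

    va_start(args, count);
    for(i=0; i < count; i++) {
        total += va_arg(args, size_t);
    }
    va_end(args);

    return total;
}

/* The integer constant WTSIZE has type int, so it is cast at the
 * call site to match what va_arg expects. */
size_t example_total(void) {
    return total_table_size(2, (size_t)WTSIZE, (size_t)WTSIZE);
}
```

On the printing side, %zu is the printf conversion meant for size_t, which avoids both the (int) cast and guessing between %d and %ld.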

This whole experience made me like Address Sanitizer a lot, and it’s been helping find some other more minor issues today with its buddy valgrind.

Not getting a passing grade from valgrind anymore, but I’m down to a handful of issues to track down now! A couple look like they’re in the pipewire-jack implementation flagged as “possibly lost”, which is probably the pipewire devs doing clever things, but I’m hoping that’s not another rabbit hole waiting.

Looking forward to digging into an actual instrument script for rain. There’s more plumbing to do, I’d like to experiment with using LMDB as a sampler to store buffers that can be shared between instruments. I’m coming more and more around to the idea that I’d like to try to interact with the system through microphones and sensors mostly, with the input driven by a pile of trash/objects/toys/whatever plus brass… some kind of command interface is inevitable, but I’m feeling like it makes sense to minimize for this project and focus on the inputs/outputs to the system.

Here’s a little demo with the memory issues fixed again, not all that much to hear: there are 20 pulsar oscs spread across a parameter space tuned to a nice set of 12 pitches each enveloped by windows of various shapes. When the instrument gets a trigger message (through usb serial or some external thing, or another instrument) it runs the async render callback and puts the buffer into the scheduler – almost exactly like the python renderer, but skipping the serialization step since we can just pass pointers around when everything is in C-land.

Here’s the instrument program

    #include "astrid.h"

    #define NAME "pulsar"

    #define SR 48000
    #define CHANNELS 2
    #define NUMOSCS (CHANNELS * 10)
    #define WTSIZE 4096
    #define NUMFREQS 12

    lpfloat_t scale[] = {
        55.000f,
        110.000f,
        122.222f,
        137.500f,
        146.667f,
        165.000f,
        185.625f,
        206.250f,
        220.000f,
        244.444f,
        275.000f,
        293.333f
    };

    enum InstrumentParams {
        PARAM_FREQ,
        PARAM_FREQS,
        PARAM_AMP,
        PARAM_PW,
        NUMPARAMS
    };

    typedef struct localctx_t {
        lppulsarosc_t * oscs[NUMOSCS];
        lpbuffer_t * ringbuf;
        lpbuffer_t * env;
        lpbuffer_t * curves[NUMOSCS];
        lpfloat_t env_phases[NUMOSCS];
        lpfloat_t env_phaseincs[NUMOSCS];
    } localctx_t;

    lpbuffer_t * renderer_callback(void * arg) {
        lpbuffer_t * out;
        lpinstrument_t * instrument = (lpinstrument_t *)arg;
        localctx_t * ctx = (localctx_t *)instrument->context;

        out = LPBuffer.cut(ctx->ringbuf, LPRand.randint(0, SR*20), LPRand.randint(SR, SR*10));
        if(LPBuffer.mag(out) > 0.01) {
            LPFX.norm(out, LPRand.rand(0.6f, 0.8f));
        }

        return out;
    }

    void audio_callback(int channels, size_t blocksize, float ** input, float ** output, void * arg) {
        size_t idx, i;
        int j, c;
        lpfloat_t freqs[NUMFREQS];
        lpfloat_t sample, amp, pw, saturation;
        lpinstrument_t * instrument = (lpinstrument_t *)arg;
        localctx_t * ctx = (localctx_t *)instrument->context;

        if(!instrument->is_running) return;

        for(i=0; i < blocksize; i++) {
            idx = (ctx->ringbuf->pos + i) % ctx->ringbuf->length;
            for(c=0; c < channels; c++) {
                ctx->ringbuf->data[idx * channels + c] = input[c][i];
            }
        }

        ctx->ringbuf->pos += blocksize;

        amp = astrid_instrument_get_param_float(instrument, PARAM_AMP, 0.08f);
        pw = astrid_instrument_get_param_float(instrument, PARAM_PW, 1.f);
        astrid_instrument_get_param_float_list(instrument, PARAM_FREQS, NUMFREQS, freqs);

        for(i=0; i < blocksize; i++) {
            sample = 0.f;
            for(j=0; j < NUMOSCS; j++) {
                saturation = LPInterpolation.linear_pos(ctx->curves[j], ctx->curves[j]->phase);
                ctx->oscs[j]->saturation = saturation;
                ctx->oscs[j]->pulsewidth = pw;
                ctx->oscs[j]->freq = freqs[j % NUMFREQS] * 4.f * 0.6f;
                sample += LPPulsarOsc.process(ctx->oscs[j]) * amp * LPInterpolation.linear_pos(ctx->env, ctx->env_phases[j]) * 0.12f;

                ctx->env_phases[j] += ctx->env_phaseincs[j];

                if(ctx->env_phases[j] >= 1.f) {
                    // env boundaries
                }

                while(ctx->env_phases[j] >= 1.f) ctx->env_phases[j] -= 1.f;

                ctx->curves[j]->phase += ctx->env_phaseincs[j];
                while(ctx->curves[j]->phase >= 1.f) ctx->curves[j]->phase -= 1.f;
            }

            for(c=0; c < channels; c++) {
                output[c][i] += (float)sample * 0.5f;
            }
        }
    }

    int main() {
        lpinstrument_t instrument = {0};
        lpfloat_t selected_freqs[NUMFREQS] = {0};

        // create local context struct
        localctx_t * ctx = (localctx_t *)calloc(1, sizeof(localctx_t));
        if(ctx == NULL) {
            printf("Could not alloc ctx: (%d) %s\n", errno, strerror(errno));
            exit(1);
        }

        // create env and ringbuf
        ctx->env = LPWindow.create(WIN_HANNOUT, 4096);
        ctx->ringbuf = LPBuffer.create(SR * 30, CHANNELS, SR);

        // setup oscs and curves
        for(int i=0; i < NUMOSCS; i++) {
            ctx->env_phases[i] = LPRand.rand(0.f, 1.f);
            ctx->env_phaseincs[i] = (1.f/SR) * LPRand.rand(0.001f, 0.1f);
            ctx->curves[i] = LPWindow.create(WIN_RND, 4096);

            ctx->oscs[i] = LPPulsarOsc.create(2, 2, // number of wavetables, windows
                WT_SINE, WTSIZE, WT_TRI2, WTSIZE, // wavetables and sizes
                WIN_SINE, WTSIZE, WIN_HANN, WTSIZE // windows and sizes
            );

            ctx->oscs[i]->samplerate = (lpfloat_t)SR;
            ctx->oscs[i]->wavetable_morph_freq = LPRand.rand(0.001f, 0.15f);
            ctx->oscs[i]->phase = 0.f;
        }

        // Set the stream and async render callbacks
        instrument.callback = audio_callback; // FIXME call this `stream` maybe, or `ugens`?
        instrument.renderer = renderer_callback;

        if(astrid_instrument_start(NAME, CHANNELS, (void*)ctx, &instrument) < 0) {
            printf("Could not start instrument: (%d) %s\n", errno, strerror(errno));
            exit(1);
        }

        /* populate initial freqs */
        for(int i=0; i < NUMFREQS; i++) {
            selected_freqs[i] = scale[LPRand.randint(0, NUMFREQS*2) % NUMFREQS] * 0.5f + LPRand.rand(0.f, 1.f);
        }

        astrid_instrument_set_param_float_list(&instrument, PARAM_FREQS, selected_freqs, NUMFREQS);

        while(instrument.is_running) {
            astrid_instrument_tick(&instrument);

            // Respond to param update messages -- TODO: parse param args with PARAM_ consts
            if(instrument.msg.type == LPMSG_UPDATE) {
                syslog(LOG_DEBUG, "MSG: update | %s\n", instrument.msg.msg);

                for(int i=0; i < NUMFREQS; i++) {
                    selected_freqs[i] = scale[LPRand.randint(0, NUMFREQS*2) % NUMFREQS] * 0.5f + LPRand.rand(0.f, 1.f);
                }

                astrid_instrument_set_param_float_list(&instrument, PARAM_FREQS, selected_freqs, NUMFREQS);
                astrid_instrument_set_param_float(&instrument, PARAM_AMP, LPRand.rand(0.5f, 1.f));
                astrid_instrument_set_param_float(&instrument, PARAM_PW, LPRand.rand(0.05f, 1.f));
            }
        }

        for(int o=0; o < NUMOSCS; o++) {
            LPPulsarOsc.destroy(ctx->oscs[o]);
            LPBuffer.destroy(ctx->curves[o]);
        }

        LPBuffer.destroy(ctx->ringbuf);
        LPBuffer.destroy(ctx->env);
        free(ctx);

        printf("Done!\n");
        return 0;
    }

Log February 2024

Log January 2024

Log December 2023